Linearity: The relationship between \(x\) and \(y\) must be approximately linear. Check this assumption by examining a scatterplot of \(x\) and \(y\).

Independence of errors: There should be no relationship between the residuals and the predicted values. Check this assumption by examining a scatterplot of “residuals versus fits”; the correlation should be approximately 0.

Normality of errors: The residuals must be approximately normally distributed. Check this assumption by examining a normal probability plot; the observations should be near the line. You can also examine a histogram of the residuals; it should be approximately normally distributed.

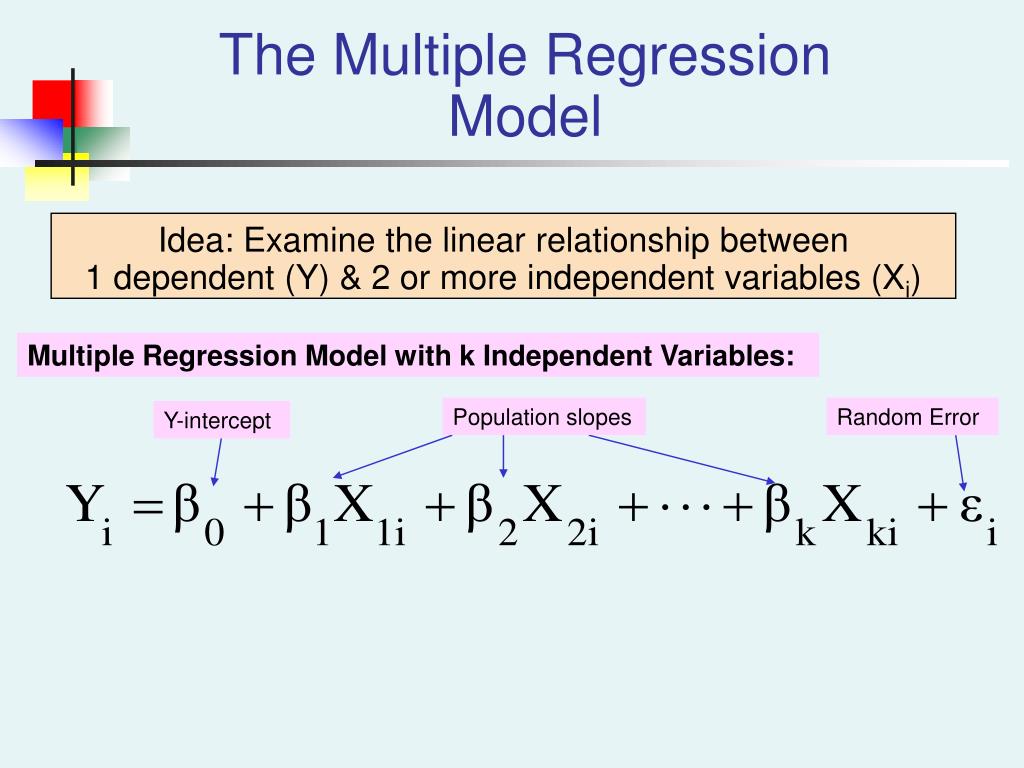

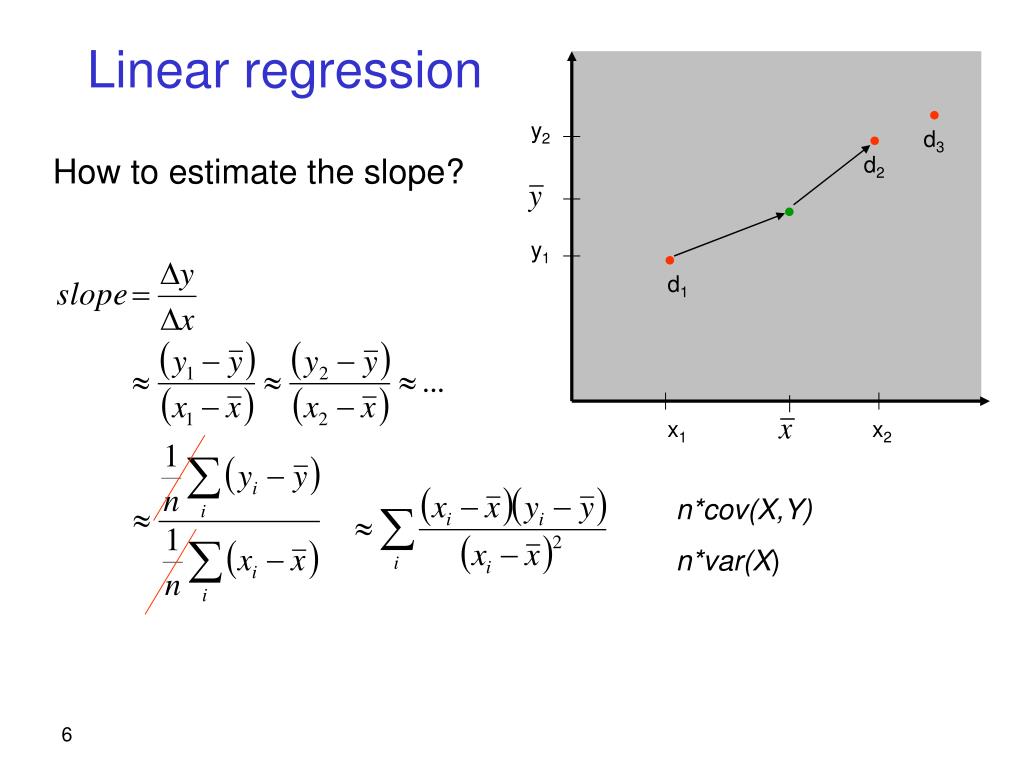

The "simple" part is that we will be using only one explanatory variable. If there are two or more explanatory variables, then multiple linear regression is necessary. The "linear" part is that we will be using a straight line to predict the response variable using the explanatory variable. You may recall from an algebra class that the formula for a straight line is \(y=mx+b\), where \(m\) is the slope and \(b\) is the \(y\)-intercept. The slope is a measure of how steep the line is; in algebra this is sometimes described as "change in \(y\) over change in \(x\)," or "rise over run." A positive slope indicates a line moving from the bottom left to the top right.
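A small Python sketch of this algebra: the hypothetical helpers below compute a slope as rise over run from two points, solve for the \(y\)-intercept, and use \(y = mx + b\) to make a prediction. In regression the slope and intercept are instead estimated from all of the data (by least squares), but \(m\) and \(b\) mean the same thing.

```python
def slope_from_points(p1, p2):
    """Slope m = (change in y) / (change in x), i.e. rise over run."""
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2:
        raise ValueError("a vertical line has an undefined slope")
    return (y2 - y1) / (x2 - x1)


def line_through(p1, p2):
    """Return (m, b) for the straight line y = m*x + b through two points."""
    m = slope_from_points(p1, p2)
    b = p1[1] - m * p1[0]  # solve y1 = m*x1 + b for the y-intercept
    return m, b


def predict(m, b, x):
    """Evaluate y = m*x + b at x."""
    return m * x + b


m, b = line_through((1, 3), (4, 9))
print(m, b)               # 2.0 1.0 (positive slope: rises left to right)
print(predict(m, b, 10))  # 21.0
```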

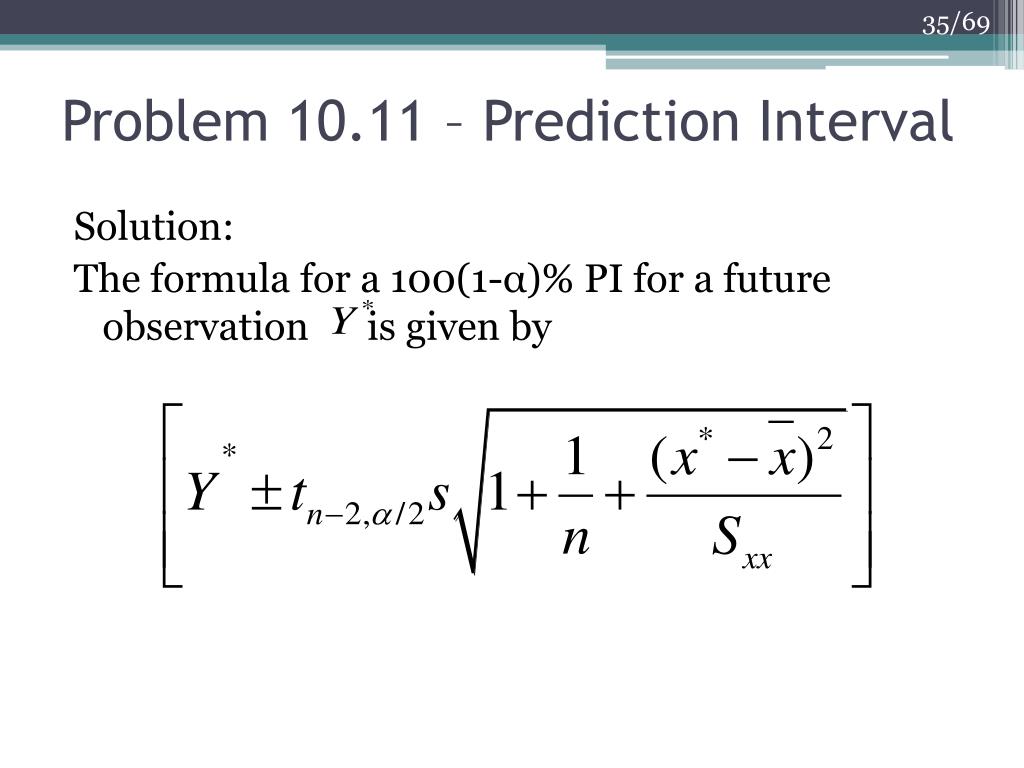

Recall from Lesson 3 that regression uses one or more explanatory variables (\(x\)) to predict one response variable (\(y\)). In this lesson we will be learning specifically about simple linear regression.

Starting from the matrix form \(y = Xb + \epsilon\), the least-squares estimate of \(b\) is \(\hat{b} = (X^\top X)^{-1} X^\top y\). The inverse \((X^\top X)^{-1}\) is very hard to calculate if the matrix \(X\) is very large, and computing it might give numerical accuracy issues. Another way to find the optimal value of \(b\) in this situation is to use a gradient descent type of method. The function we want to minimize, the sum of squared residuals, is convex and unconstrained, so in practice we could use a gradient method if need be.
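To compare a closed-form least-squares solution with a gradient descent method, here is a minimal Python sketch for the simple (one explanatory variable) case. With a column of ones and a column of \(x\) values in \(X\), the matrix formula reduces to the slope \(m = S_{xy}/S_{xx}\) and intercept \(\bar{y} - m\bar{x}\) of the line \(y = mx + b\), where \(S_{xy} = \sum (x_i - \bar{x})(y_i - \bar{y})\) and \(S_{xx} = \sum (x_i - \bar{x})^2\). The function names, learning rate, step count, and data are illustrative assumptions; a statistics package would not fit a line this way.

```python
def closed_form_fit(x, y):
    """Least-squares slope and intercept: m = Sxy / Sxx, b = ybar - m * xbar."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    sxx = sum((xi - xbar) ** 2 for xi in x)
    m = sxy / sxx
    return m, ybar - m * xbar


def gradient_descent_fit(x, y, learning_rate=0.01, steps=20_000):
    """Minimize the mean squared error (1/n) * sum((y - (m*x + b))**2)
    by plain gradient descent, starting from m = b = 0.
    The learning rate and step count are assumptions tuned for small data."""
    n = len(x)
    m, b = 0.0, 0.0
    for _ in range(steps):
        residuals = [yi - (m * xi + b) for xi, yi in zip(x, y)]
        # Partial derivatives of the mean squared error with respect to m and b.
        grad_m = -2.0 / n * sum(r * xi for r, xi in zip(residuals, x))
        grad_b = -2.0 / n * sum(residuals)
        m -= learning_rate * grad_m
        b -= learning_rate * grad_b
    return m, b


# Illustrative (made-up) data.
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2, 13.8, 16.1]
m_cf, b_cf = closed_form_fit(x, y)
m_gd, b_gd = gradient_descent_fit(x, y)
print(f"closed form:      m = {m_cf:.4f}, b = {b_cf:.4f}")
print(f"gradient descent: m = {m_gd:.4f}, b = {b_gd:.4f}")
```

Because the objective is convex, gradient descent with a small enough learning rate approaches the same line as the closed form; too large a learning rate makes the updates diverge instead.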
